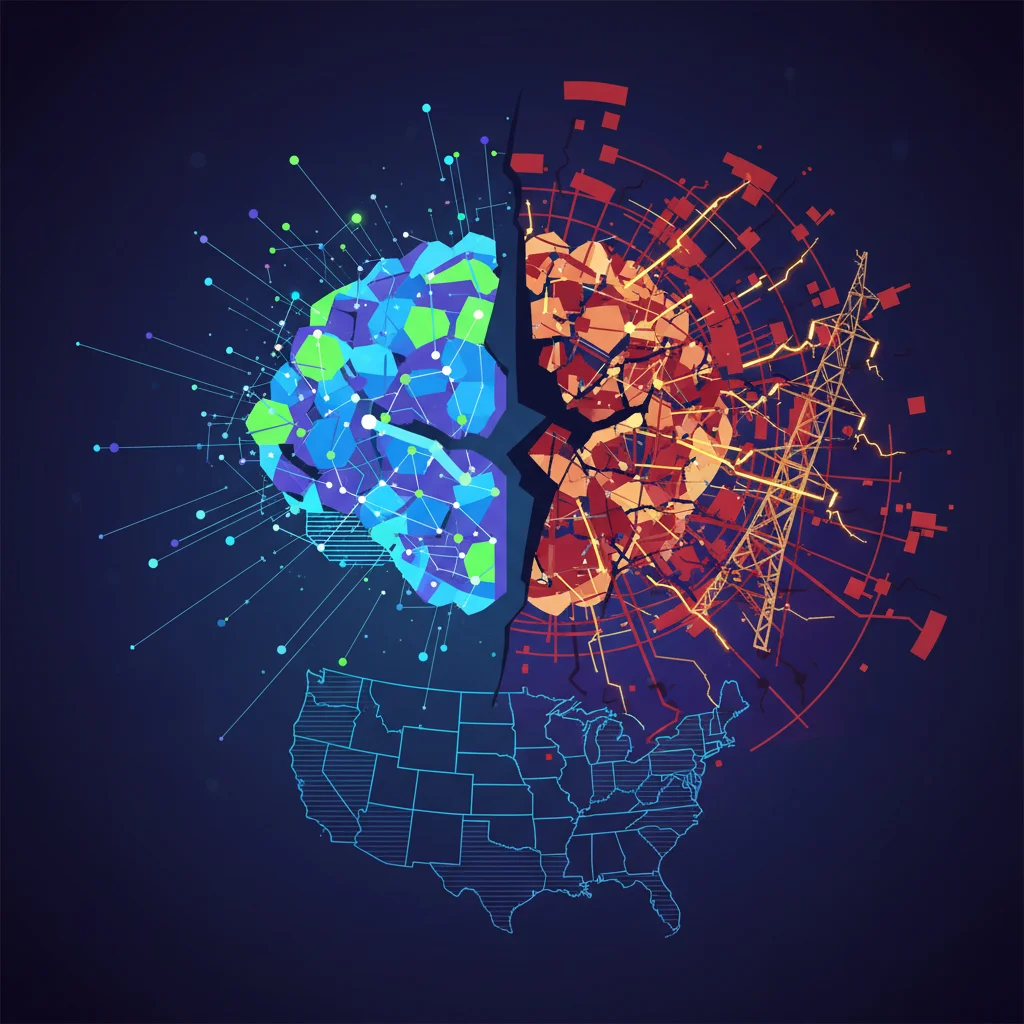

Is America’s Energy Grid Sabotaging Its AI Future?

We are living in the golden age of artificial intelligence. Every day, it feels like a new jaw-dropping model, a groundbreaking piece of software, or a revolutionary SaaS platform is launched. From startups to tech giants, the race to build the next generation of AI is on, and America is, for now, in the lead. We’re acing the algorithms, dominating the programming, and driving global innovation.

But what if the biggest threat to this dominance isn’t a rival algorithm or a foreign tech company, but something far more fundamental? What if it’s the very electricity that powers our digital world? The United States is making a massive, risky bet on a 20th-century energy source—hydrocarbons—to power its 21st-century ambitions. This strategy, as highlighted in a recent Financial Times analysis, could create a critical bottleneck, leading to volatile costs, resource scarcity, and a potential surrender of our lead in the most important technology race of our time.

The Insatiable Appetite of Modern AI

Before we dive into the energy grid, let’s grasp the sheer scale of the power demand. The sophisticated machine learning models that power generative AI are incredibly energy-intensive. Training a single large AI model can consume as much electricity as hundreds of homes for an entire year. And that’s just the training. The real energy sink is “inference”—the process of actually using the AI to generate text, images, or code.

This colossal energy demand is funneled into massive, ever-expanding data centers. These are the physical hearts of the cloud, the engines behind every SaaS application, and the factories of the digital age. As the demand for AI services explodes, so does the need for more data centers, each one drawing as much power as a small city. Some projections suggest that by 2026, the AI sector alone could consume up to 134 terawatt-hours (TWh) annually, a figure comparable to the entire yearly electricity consumption of countries like Argentina or Sweden.
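To make that annual figure more tangible, it helps to convert it into average continuous power. The snippet below is a back-of-the-envelope sketch, not a forecast: it assumes the load is spread evenly over a non-leap year, and the function name `average_power_gw` is purely illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def average_power_gw(annual_twh: float) -> float:
    """Convert annual energy use in TWh to average continuous power in GW."""
    # 1 TWh = 1,000 GWh, and GWh per year divided by hours per year gives GW.
    return annual_twh * 1_000 / HOURS_PER_YEAR


# The 134 TWh projection quoted above, expressed as round-the-clock demand.
print(f"{average_power_gw(134):.1f} GW")  # prints "15.3 GW"
```

In other words, 134 TWh a year works out to roughly 15 GW of demand running every hour of every day, under the even-load assumption stated above.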

This isn’t a distant problem; it’s happening now. Tech giants are scrambling to secure power for their next wave of data centers, sometimes finding that the local grid simply can’t keep up. The success of our entire digital ecosystem, from enterprise automation to consumer apps, rests on a simple, brutal reality: we need more power. A lot more.

America’s Hydrocarbon Gamble: A Foundation of Volatility

So, how is the U.S. planning to meet this unprecedented demand? The current strategy leans heavily on natural gas. It’s abundant, relatively cheap (for now), and can be deployed quickly. On the surface, it seems like a pragmatic choice. But dig a little deeper, and you find a foundation built on volatility and hidden costs.

The price of natural gas is notoriously unstable, subject to geopolitical shocks, supply chain disruptions, and weather events. This price volatility translates directly into unpredictable electricity costs for data centers. For a hyperscaler like Google or Amazon, this is a massive financial headache. For the thousands of startups building on their cloud platforms, it’s a potential death sentence. Unpredictable operational costs can destroy a startup’s financial model and stifle innovation before it even gets off the ground.
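How directly does the gas price feed into a power bill? A rough way to see it is to multiply a plant's heat rate (fuel burned per unit of electricity) by the fuel price. The sketch below uses hypothetical numbers throughout: a 100 MW data center running flat out, an assumed heat rate of 7 MMBtu per MWh, and two illustrative gas prices. It deliberately ignores capacity charges, transmission, and hedging contracts, so treat it as an illustration of sensitivity rather than a cost model.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_fuel_cost_usd(
    load_mw: float,
    gas_price_usd_per_mmbtu: float,
    heat_rate_mmbtu_per_mwh: float = 7.0,  # assumed value, for illustration only
) -> float:
    """Fuel-only cost of serving a constant load from a gas-fired plant."""
    energy_mwh = load_mw * HOURS_PER_YEAR
    fuel_mmbtu = energy_mwh * heat_rate_mmbtu_per_mwh
    return fuel_mmbtu * gas_price_usd_per_mmbtu


# Hypothetical scenario: the same 100 MW facility at two different gas prices.
for price in (3.0, 9.0):
    cost_millions = annual_fuel_cost_usd(100, price) / 1e6
    print(f"Gas at ${price:.2f}/MMBtu -> ${cost_millions:.1f}M per year in fuel")
```

Under these assumptions, a tripling of the gas price triples the fuel component of the bill, from about $18.4M to about $55.2M a year. That linear pass-through is the exposure the paragraph above describes.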

Furthermore, this hydrocarbon-centric strategy stands in stark contrast to that of our main competitor in the AI race: China. While the U.S. doubles down on fossil fuels, China is executing a long-term energy strategy built around renewables and nuclear power. It is installing more solar panels than the rest of the world combined and is on a path to have the world’s largest fleet of nuclear reactors. This strategy is designed for one thing: long-term price stability and energy independence. A grid powered by renewables and nuclear offers predictable, low-cost electricity once the initial infrastructure is built, which is a perfect environment for energy-hungry AI development.

Let’s compare the primary energy sources being considered to power the next generation of data centers:

| Energy Source | Cost Stability | Water Usage | Carbon Footprint | Best For… |
|---|---|---|---|---|
| Natural Gas | Low (Volatile) | High (Thermal Plant) | Medium-High | Meeting immediate demand spikes (peaker plants). |
| Solar & Wind | High (Stable OPEX) | Very Low | Very Low | Low-cost daytime power, but requires storage for 24/7 reliability. |
| Nuclear | Very High (Stable) | High (Thermal Plant) | Very Low | Reliable, 24/7 carbon-free baseload power for massive data centers. |

The Collision Course: When AI’s Thirst Meets a Drying World

The problem isn’t just about electricity; it’s also about water. The connection between energy, water, and computing forms a dangerous triangle, often called the “water-energy nexus.”

- Power Generation: Thermal power plants—which include natural gas, coal, and nuclear facilities—boil water to create steam that turns turbines. This process requires a staggering amount of water for cooling.

- Data Center Cooling: The servers in data centers generate immense heat and must be constantly cooled, another process that is incredibly water-intensive, especially in warmer climates.

This double drain on water resources is creating a direct conflict with another critical sector: agriculture. In states like Arizona, a major hub for data centers, tech companies are competing with farmers for access to dwindling water supplies from sources like the Colorado River. As the FT points out, this isn’t a theoretical risk. It’s a looming crisis that could lead to genuine food insecurity, as water is diverted from growing crops to cooling servers that are training the next viral AI chatbot.

This creates not only an ethical dilemma but also a serious business risk. What happens when a region is hit by a severe drought and local governments are forced to ration water? Will they prioritize farms or data centers? The potential for operational disruption is massive, and it’s a risk baked into the current U.S. strategy of building water-hungry infrastructure in already water-stressed regions.

Building a Resilient Foundation for the AI Future

So, are we doomed to fall behind? Not necessarily, but it requires a strategic shift away from the short-term convenience of hydrocarbons and toward a more resilient, long-term vision. The path forward involves a multi-pronged approach from policymakers, tech companies, and developers alike.

For Policymakers and the Energy Sector:

The goal must be a modern, stable, and clean energy grid. That means aggressive investment in a diverse portfolio of energy sources: fast-tracking the deployment of next-generation nuclear reactors (like Small Modular Reactors, or SMRs) for reliable, carbon-free baseload power, alongside continued expansion of solar, wind, and geothermal. A grid that can provide cheap, stable, and abundant power is the ultimate competitive advantage in the AI era.

For Tech Companies and Innovators:

The industry needs to aggressively pursue efficiency at every level. This includes:

- Hardware Innovation: Designing more energy-efficient chips and servers specifically for AI workloads.

- Sustainable Infrastructure: Pioneering new cooling technologies that use less water, and strategically locating data centers in regions with cool climates and abundant clean energy.

- Software Optimization: Developing more efficient machine learning models and algorithms that deliver the same performance with a smaller computational (and thus energy) footprint.

For Developers and Tech Professionals:

The principle of “green coding” needs to move from a niche interest to a core competency. Writing efficient, optimized code is no longer just about performance; it’s about sustainability and cost. Understanding the energy implications of your code, from a simple script to a complex automation workflow, will become an increasingly valuable skill in a power-constrained world.
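What might that look like in practice? Here is a minimal sketch, assuming CPU time is a reasonable proxy for energy on a single machine. It times two functions that produce the same answer and converts the CPU seconds into an estimated energy figure. The 65 W power draw is a made-up placeholder, and the helper names (`estimate_joules`, `profile`) are hypothetical. Real measurements would need hardware power counters or a dedicated profiling tool.

```python
import time

ASSUMED_CPU_WATTS = 65.0  # hypothetical average power draw; measure your own hardware


def count_hits_list(haystack, needles):
    """Membership test against a list: each lookup scans up to len(haystack) items."""
    return sum(1 for n in needles if n in haystack)


def count_hits_set(haystack, needles):
    """Same result, but builds a set once so each lookup is O(1) on average."""
    lookup = set(haystack)
    return sum(1 for n in needles if n in lookup)


def estimate_joules(cpu_seconds, watts=ASSUMED_CPU_WATTS):
    """Energy (J) = power (W) x time (s). A crude proxy, not a measurement."""
    return cpu_seconds * watts


def profile(func, *args):
    """Run func and return its result plus an estimated energy cost in joules."""
    start = time.process_time()
    result = func(*args)
    elapsed = time.process_time() - start
    return result, estimate_joules(elapsed)


haystack = list(range(2_000))
needles = list(range(0, 4_000, 2))

for func in (count_hits_list, count_hits_set):
    hits, joules = profile(func, haystack, needles)
    print(f"{func.__name__}: {hits} hits, ~{joules:.4f} J (estimated)")
```

Both functions return the same count, but the set-based version does far less work per lookup. Multiply a difference like that across millions of requests and the choice of data structure starts to show up on the power bill.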

Conclusion: The Race is More Than Just Code

The United States is home to unparalleled talent in software development, AI research, and entrepreneurial innovation. We are leading the world in creating the intelligence of the future. But this incredible digital engine is being bolted onto a physical chassis—an energy and water infrastructure—that is becoming increasingly fragile and expensive.

The AI race will not be won by algorithms alone. It will be won by the nation that can build the most robust, efficient, and sustainable physical foundation to support its digital ambitions. A bet on hydrocarbons is a bet on volatility, scarcity, and uncertainty. A bet on a modern, clean, and stable energy grid is a bet on long-term technological supremacy. The choice we make today will determine who powers the future.